Kubernetes 101: Building a REST API with Go

April 14th, 2024 · 15 min read

devops, kubernetes, go, rest, api

I'm starting this new series with a brief thought on the state of web deployments today. Feel free to skip to the next section if you want to get into the development.

Over the years, I've been working with JavaScript technologies. React, Next.js, Node, and many others.

And, in the past few years, there's been a big trend toward serverless deployments. Instead of having a server running all the time, you execute the code only when it's needed.

I think serverless architectures make sense for a lot of things. They’re easy to work with. You don’t need to set up your own local infra, since everything is provided by your cloud provider.

The tools I'm most productive with these days are the ones in the T3 Stack. It's a famous stack popularized by Theo. It's easy to use and set up. You can start a new repo using their CLI and deploy it to Vercel/Netlify.

But it does abstract a lot of things for the developer. You don't have to go through the pain of setting up the database connection. No need to worry about how to deploy your frontend. Or your API. We connect the GitHub repo to the PaaS and we're good to go.

Yet, when I first learned about programming, serverless was not yet a thing. I remember setting up my basic environment. Running everything locally. Calling the database by using a raw connection url and SQL. Spending a lot of time battling the OS internals (and learning a lot along the way).

But I've been using serverless more and more over the years. Which means I missed a lot of the evolutions we had in traditional deployments. I haven't built any microservices architecture from scratch. Never used Kubernetes. Didn't touch much of DevOps besides what's needed for serverless. Which I think is a lot simpler.

And I have a growing interest in distributed systems. I want to understand how companies like Meta and Google serve billions of users with 99.99% uptime.

I have an okay knowledge about serverless. I know its use cases, its drawbacks, things to watch out for. Yet, I'm oblivious when it comes to designing a service architecture using Kubernetes.

It's been a while since I did something like this. Learn a new tool. Build a new application that solves a pain for me and for others, using a new architecture. And I'm curious if we can make a simple enough Kubernetes application that feels as productive as serverless.

In this scenario, efficiency matters. A lot. Which means using a software architecture that allows you to move fast. To solve the problems you currently have instead of having to plan for the future.

I am productive when using full stack frameworks like Next.js. I know I can build an application quickly. Hence, I want to see if I can do something at a similar velocity without relying on serverless. And that it can accommodate changes quickly as I add more requirements.

But, more importantly: I'm curious. I like computers. And I want to have a deeper understanding of how they work. Serverless is abstracting too many things for me right now. So I'll step outside my comfort zone. To regain the feeling I used to have when first learning about programming.

I know plenty of companies are using Kubernetes to manage their services these days. I'm figuring out the why. I know it's a container orchestration tool. And that it's able to spin up containers, and replace them as they die. But I don't know anything else. 😅

By the end of this series, I want to get a better understanding of the whole k8s ecosystem.

As for Go, I've been using it more and more lately. Yet I only used it for simple coding projects. Now I want to push my limits even more. By creating a REST API that I plan to use in many projects in the future.

A disclaimer: I already started coding this project. Yet, before going any further, I want to write down my thought process. This accomplishes two things:

- It helps me take a step back and see where I'm currently at, and my next steps. This exercise will help me understand all the requirements. And to plan for them accordingly.

- It helps cement the knowledge I'm building with this project. By trying to teach someone, it pushes me to make sure I'm understanding everything. I think teaching is the ultimate learning tool for software engineers.

As of writing this post, I'm able to call the REST API with an HTML document in the request body. The API processes the HTML, converts it to a PDF, and then returns it. Currently, the only client is the CLI tool I'm building to generate fake invoices.

Why fake invoices, you ask? I need them all the time at my job. I work on the Bill Pay team, where we scan invoices to pay them. And because of that I need different invoices, varying in number of line items, payment method, amount, etc.

I could create them manually. I know. But I want to over-engineer my way into automation. That's a typical fun weekend for me. Yeah I'm probably not normal. Okay, that's enough of an intro. Let's start.

Project requirements

Functional requirements:

- I'm able to convert various files into PDFs. For now, I'll start with HTML, since then I can build the invoice with HTML/CSS.

- I'm able to create invoices for multiple use cases. Like many line items, different payment methods, among others. We'll use HTML to create the invoice. Because it's simple to parse and change. Then we can convert the HTML to PDF with the other service.

- In the future, I want to also use this API for my own analytics service. I plan to use it for my website. It'll store my most popular content, and also likes on each article. Heavily inspired by Josh Comeau's website.

Non-functional requirements:

- We'll use Go to create the API. For now, with no frameworks, since Go's standard library is good enough.

- To manage the deployment, we'll use Kubernetes. No idea how we're going to deploy it. DigitalOcean, AWS, Google Cloud. No idea. I'll learn about them when the time comes (probably part 2 of this piece).

- Authentication. Something simple. To ensure only authorized clients can make calls to my personal API. API keys are probably enough here.

- Caching the invoice generation. The endpoint will receive the invoice parameters. If we have a similar invoice created, we can return it and avoid the conversion process.

- To implement the conversion service, we'll use Gotenberg. It's a well-maintained service, written in Go, for converting documents to PDF files. It provides a developer-friendly API to interact with tools like Chromium and LibreOffice. It should be enough for all kinds of conversions.

- The Gotenberg service should not be accessible outside the K8s cluster. Our clients will interact with the REST API exclusively.

There may be details missing here. But I think this is a good enough list for now. We can now progress to the system design phase.

System design

Our goal here is to understand how our services will interact with each other. How the clients will call our API.

Given that I'm not familiar with K8s, my design will probably look weird. 😅

But let me try my best.

As far as designs go, this is on the simple side. Since I'm not familiar with the tech, there might be a few steps I missed here and there.

But the goal, and how the components interact with each other, sounds clear enough.

Now, before moving on, one last thing. Defining our endpoints and how we'll handle the request and response payload.

API Endpoints

- POST /api/fake-invoice. Will receive the desired invoice data. If empty, return a randomized invoice. The request payload may contain the options to generate an invoice with specific values.

- POST /api/convert/html-to-pdf. Will receive the HTML file in its payload. And will return Content-Type: application/pdf, with the PDF generated by converting the HTML.

For documenting the endpoints, we should use something like OpenAPI. It has been a long time since I implemented one of those specs. We'll do that eventually. We need to make sure our API customers are happy, and that everything they need to know is there.

Implementation

I haven't implemented any Go project bigger than one file up until now. So, no idea about the best practices. For this first version, I'll put files where they make sense to me.

You can check my file structure in this GitHub repo.

For the PDF conversion, my plan is to use Gotenberg. It provides a friendly API to interact with tools for converting documents.

It's available as a Docker image in the registry. My plan is to build two services in my K8s cluster: one for the REST API, and another one for Gotenberg. Then, using Kubernetes DNS we can make HTTP calls from the API to the Gotenberg service in our local network.

Development workflow

When I first started this project, I ran it like this:

The usual way to run a Go project.

Yet, I want to learn K8s. So I knew I'd have to look into how to set up a K8s project. To do that, I relied on ChatGPT to guide me through the initial setup. You can see the resulting files in the repo linked in the previous section.

The first step for me was to containerize my Go application.

To do that, we need to create a Dockerfile. It'll define the instructions to build the container image. Here it is:

Most commands are commented. But the summary is to go through the build steps and get the server up and running.

Now that I have my Dockerfile, I can build the container image. Which we'll use to start our K8s service.

This command builds the Docker image and stores the image in my local registry. When I run docker images, I get this:

We have our image available in our local registry. Now, the next step is to set up our local Kubernetes cluster.

Setting up my local Kubernetes cluster

There are a few options here. They vary depending on what system you're on. I'm using a MacBook Pro, so keep that in mind if you're following along. For Ubuntu users, MicroK8s appears to be a good choice. There's also Rancher Desktop, which is cross-platform.

The most straightforward one is Docker for Mac. You can install it and enable Kubernetes in the settings. This is how I first got started. It's the easiest one to set up.

However, I didn't know that 😅 so I went with minikube instead. I used brew install minikube to install it. Then minikube start to run the cluster.

When I first started, I was using kubectl commands to add my deployments to my cluster. So, this was my very first inefficient development workflow:

After this, I had my application up and running.

Yet, when I tried to access localhost:8080, my API URL, nothing happened. Which is great! Why, you ask?

With Kubernetes, our deployments are isolated from the host operating system. This is to ensure security and resource encapsulation. That means that, even if our app is running locally, we can't access it using localhost. We have to explicitly expose the Kubernetes network to ours.

And, to make a pod accessible to the outside world, that's where the service comes in. Which is why we applied one in the very first place!

Deployments, Pods and Services are among the first concepts I learned while trying out Kubernetes.

Think of a deployment as a blueprint for managing pods. It describes the desired state for them. Like which container image to run, and how many replicas of the pod we need. Kubernetes' job is to keep the cluster matching this desired state.

If we choose three replicas, that means Kubernetes is going to create three Pods. When one of them crashes, Kubernetes replaces it to restore the desired state.

The Pod itself is the smallest deployable unit in Kubernetes. It's a group of one or more containers with shared storage and network. And a spec on how to run those containers.

Pods are temporary. They can come and go. Reasons could be scaling, updates, or failures. No matter why, Kubernetes manages that life cycle for us. Ensuring they're rescheduled and maintained according to the desired state. State which is defined in the deployment.yaml file.

Services are different. They provide a network abstraction to the Pods. Because Pods are ephemeral, it's necessary to have a persistent interface to interact with them. Even if the Pods die or their state changes. And they can also help with load-balancing traffic between pods.

They provide a stable IP address and DNS name by which we can access Pods. A good metaphor is a friend's phone number: even if they change their devices, the phone number stays the same.
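The phone number metaphor can also be written down as a toy model: a stable name in front of a list of Pod addresses that keeps changing. This is only an illustration. The service type, the IP addresses, and the round-robin choice are assumptions, and real Kubernetes load balancing works differently under the hood.

```go
package main

import "fmt"

// service is a toy model of a stable name in front of changing Pod IPs.
type service struct {
	name      string   // stays the same for the service's whole life
	endpoints []string // Pod IPs currently backing the service
	next      int      // index of the next endpoint to use
}

// setEndpoints replaces the backing Pods, as happens when Pods are recreated.
func (s *service) setEndpoints(ips []string) {
	s.endpoints = ips
	s.next = 0
}

// pick returns the next Pod IP in round-robin order, or false if none are up.
func (s *service) pick() (string, bool) {
	if len(s.endpoints) == 0 {
		return "", false
	}
	ip := s.endpoints[s.next%len(s.endpoints)]
	s.next++
	return ip, true
}

func main() {
	svc := &service{name: "api"}
	svc.setEndpoints([]string{"10.0.0.1", "10.0.0.2"})

	for i := 0; i < 3; i++ {
		ip, _ := svc.pick()
		fmt.Println(svc.name, "->", ip)
	}

	// Both Pods die and one replacement comes up with a new IP.
	// Callers still use the same service name.
	svc.setEndpoints([]string{"10.0.0.7"})
	ip, _ := svc.pick()
	fmt.Println(svc.name, "->", ip)
}
```

The callers only ever know the name "api". Which Pod answers is the service's problem, not theirs.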

Another Kubernetes advantage is the self-healing of its Pods.

Kubernetes self-healing is a key feature because it keeps applications running smoothly. If something goes wrong, like a container crash or a node failure, Kubernetes automatically restarts or replaces the failed Pods. This means less downtime and fewer problems for users.

I built this Kubernetes Visualizer component to help visualize K8s self-healing. It showcases how, even after a Pod is killed, K8s can create new ones. And we can see that the service name stays the same, and its age doesn't change, even when all pods are down.

Now that the service is ready, we need to access it from our machine. This way we can execute the program. To find out the URL we need to access, we run this command:

It's going to return the URL we can access. With this URL, I can now interact again with my API.

Now that we can access our application, let's go back to the development workflow.

When I make changes to my code, I need to see the results. That's programming 101, you probably all know that.

Before, I could restart my Go server and we were good. Now, because we're working with a K8s application, it's a tiny bit more work.

In my initial workflow, I did these steps:

After finishing all these commands, everything was updated.

As you can see, that's a lot of commands. Maybe I wasn't doing everything in the ideal way, like deleting pods. It doesn't matter. What mattered to me is that I didn't have a great, hot reload-enabled workflow. So I had to find a better way. One that could bring my productivity to the same level as doing Next.js/Node work.

After doing some research, I found a great answer: Tilt.

Improving local development velocity: Tilt

A toolkit for fixing the pains of microservice development. — Tilt definition from its website.

Tilt fixed all the problems with my slow development workflow.

It gave me automatic code compilation whenever I made changes.

It gave me a great dashboard to check the logs and the status of each service.

I went from running all those commands to executing only one.

Check this quick demo here:

You can see that it works with localhost as well. This is all set up in the Tiltfile. Which we'll talk about next in the setup.

For setting up Tilt, I decided to go ahead and install Docker for Mac. This way I used the Kubernetes inside Docker, since it is easier to set up.

To install it, run one of these commands based on your OS:

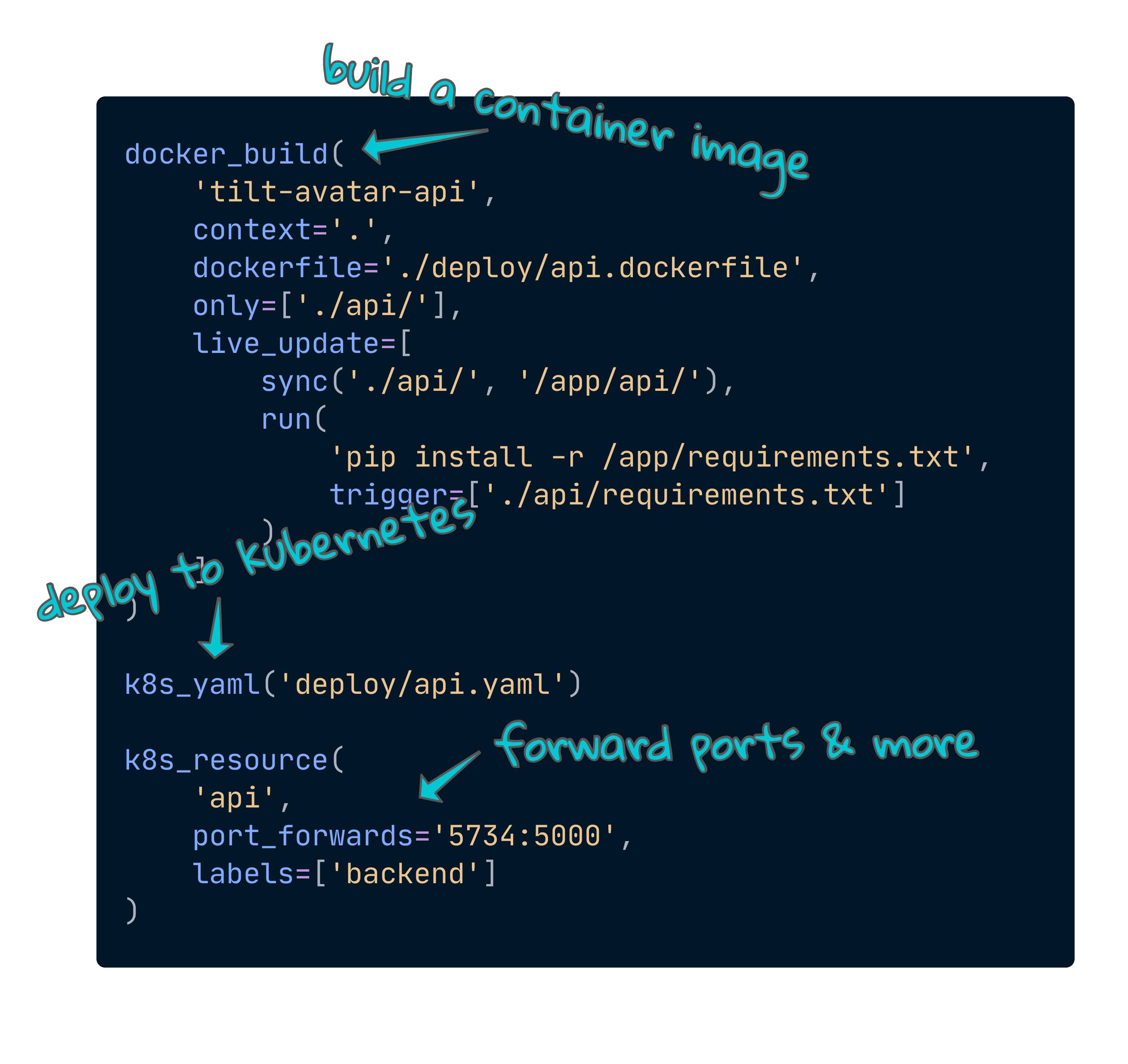

Execution is as simple as it gets. Everything lives in a Tiltfile, which controls how we're gonna build the application.

Here's an image from the Tilt docs that explains the initial concepts that we'll use:

Here's my Tiltfile for this project:

Now, as you can see in the demo video, local development is much faster.

I simply type tilt up and everything is ready to go. 🎉

Wrapping up

By the end of this article, we have:

- A working Go REST API with two endpoints. One to generate fake invoices and another one to convert HTML strings into PDFs

- Docker and Kubernetes setup

- Local development workflow with Tilt

In the next piece, I plan to get into two things: testing strategies and deployment. I have no idea how to write proper tests with Go, so I have that to look up.

And, as expected, I don't even know how to begin a deployment for a K8s application. I have a rough idea that it'll require a VPS, so I'll probably use a service like DigitalOcean.

Now, I can't wait to start working on the development again. I made a promise to myself that I wouldn't program anything anymore until I finished this. I did plan to finish it in March, so we're behind schedule. Can't wait to catch up.

Whew, I think this is my longest writing ever. Hope everything was clear and that I didn't make any mistakes.

If you do see anything weird or wrong, please feel free to point it out in the comments. Yes! We now have comments as of this post.

Thank you so much for reading. I'll see you next time! 😊